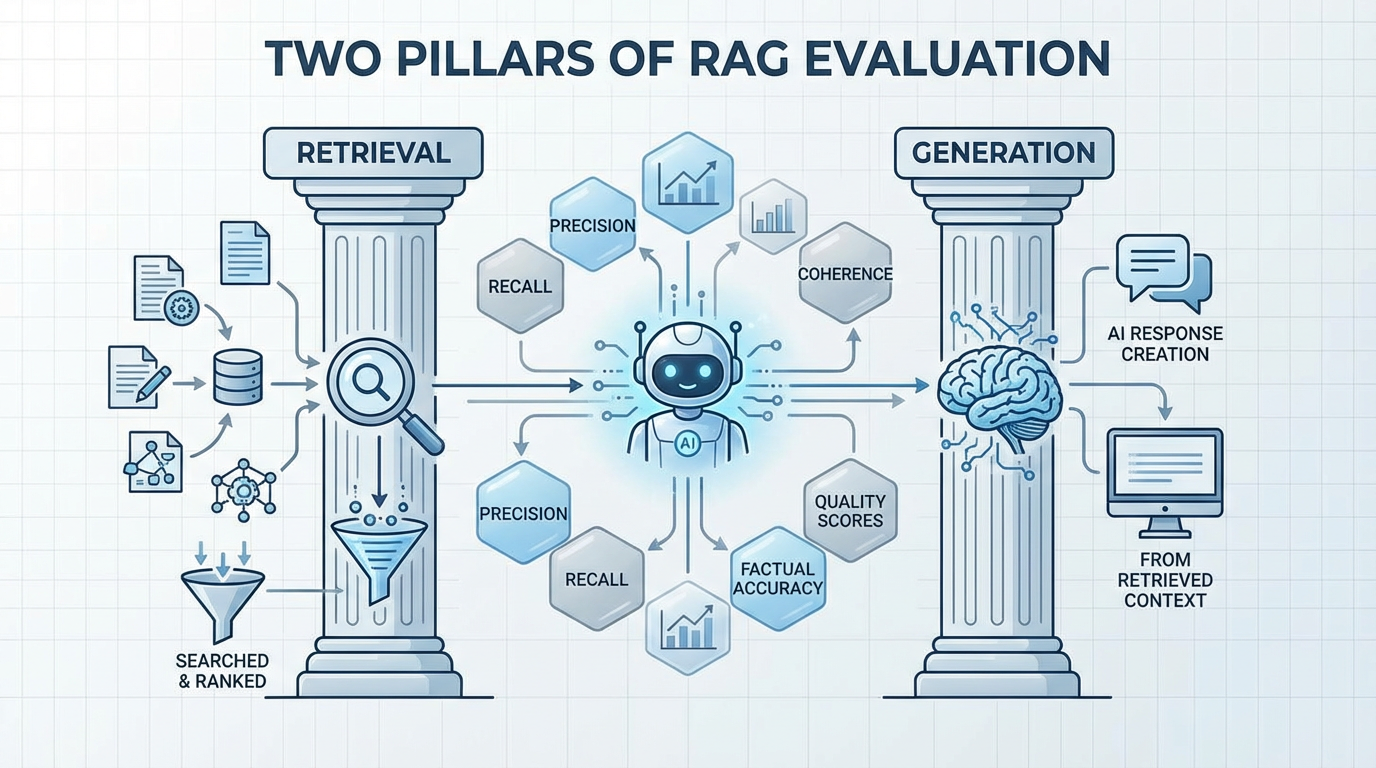

The Two Pillars of AI Agent Evaluation: Mastering Retrieval and Generation for Reliable RAG Systems

Discover the essential framework for evaluating RAG (Retrieval-Augmented Generation) agents through two critical pillars: retrieval accuracy and generation quality. Learn how to build reliable AI systems that deliver consistent, trustworthy results.

The Critical Question: How Do You Know Your AI Agent Actually Works?

You've built an AI agent. It seems to work in testing. Users are starting to interact with it. But here's the million-dollar question: How do you know if your AI agent is actually working well?

This isn't just an academic concern. In production environments, AI agents make decisions that affect real users, real business processes, and real outcomes. A poorly performing agent doesn't just deliver bad results—it erodes trust in AI systems and can cause significant business damage.

The challenge is that AI agent evaluation isn't a single metric or simple test. For Retrieval-Augmented Generation (RAG) agents—the backbone of most enterprise AI applications—evaluation requires understanding and measuring two distinct but interconnected processes.

Understanding RAG: The Foundation of Modern AI Agents

Before diving into evaluation, it's crucial to understand what makes RAG agents different from simple language models.

Traditional Language Model Limitations

- Knowledge cutoff: Only knows information from training data

- Hallucination risk: May generate plausible but incorrect information

- Static knowledge: Cannot access real-time or company-specific information

- Limited context: Restricted by token limits and training scope

RAG Agent Advantages

- Dynamic knowledge access: Retrieves relevant information from current databases

- Grounded responses: Answers based on specific, retrievable documents

- Company-specific expertise: Can access proprietary knowledge bases

- Reduced hallucination: Responses anchored to actual source material

The Two-Stage Process

- Retrieval Stage: Find relevant documents/information from knowledge base

- Generation Stage: Use retrieved information to generate accurate, helpful responses

This two-stage process is exactly why RAG evaluation requires a two-pillar approach.

Pillar 1: The Retrieval Phase - "Did We Find the Right Information?"

The first pillar of RAG evaluation focuses on a fundamental question: Did the agent retrieve the correct source documents or context?

Why Retrieval Quality Matters

Garbage In, Garbage Out: If the agent retrieves irrelevant or incorrect information, the final answer will be wrong, regardless of how sophisticated the language model is.

Consider this example:

- User Question: "What is our company's policy on remote work?"

- Poor Retrieval: Returns documents about office space planning and equipment policies

- Result: Even the best language model will generate an irrelevant or incorrect answer

Key Retrieval Metrics

1. Relevance Scoring

Metric: How relevant are the retrieved documents to the user's question? Measurement:

- Manual evaluation by domain experts

- Automated relevance scoring using semantic similarity

- User feedback on result usefulness

Example Evaluation:

Question: "How do I reset my password?"

Retrieved Documents:

- Doc 1: Password reset procedures (Relevance: 10/10)

- Doc 2: Account creation guide (Relevance: 3/10)

- Doc 3: Security best practices (Relevance: 6/10)

Average Relevance Score: 6.3/10

2. Recall Rate

Metric: Did the system retrieve all relevant documents available? Measurement: Percentage of relevant documents successfully retrieved

Example:

- Total relevant documents in knowledge base: 5

- Documents retrieved by system: 3

- Recall Rate: 60%

3. Precision Rate

Metric: Of the documents retrieved, how many were actually relevant? Measurement: Percentage of retrieved documents that are relevant

Example:

- Documents retrieved: 10

- Relevant documents among those retrieved: 7

- Precision Rate: 70%

4. Ranking Quality

Metric: Are the most relevant documents ranked highest? Measurement: Position of highly relevant documents in retrieval results

Common Retrieval Problems and Solutions

Problem 1: Semantic Mismatch

Issue: User asks about "PTO" but documents use "Paid Time Off" Solution: Implement synonym expansion and semantic search improvements

Problem 2: Context Loss

Issue: Retrieving individual paragraphs that lose important context Solution: Retrieve larger document chunks or implement context-aware chunking

Problem 3: Outdated Information

Issue: Retrieving old policy documents instead of current versions Solution: Implement document versioning and freshness scoring

Pillar 2: The Generation Phase - "Is the Answer Actually Good?"

The second pillar evaluates whether the language model generates high-quality responses based on the retrieved information.

Critical Generation Quality Dimensions

1. Groundedness

Question: Is the answer based on the retrieved context? Evaluation: Does the response contain information not found in the source documents?

Example Evaluation:

Retrieved Context: "Company policy allows 3 weeks vacation for employees with 2+ years tenure"

Generated Response: "You can take 3 weeks vacation after 2 years with the company"

Groundedness Score: 10/10 (Fully grounded in source material)

vs.

Generated Response: "You can take 4 weeks vacation and it includes sick days"

Groundedness Score: 2/10 (Contains information not in context)

2. Completeness

Question: Does the answer fully address the user's question? Evaluation: Are all aspects of the question answered?

3. Accuracy

Question: Is the information factually correct? Evaluation: Comparison against known correct answers or expert validation

4. Clarity and Usefulness

Question: Is the answer clear and actionable for the user? Evaluation: User feedback and comprehension testing

Advanced Generation Evaluation Techniques

1. LLM-as-Judge Evaluation

Method: Use another large language model to assess response quality Implementation:

Evaluation Prompt: "Given this context and user question, rate the response on:

1. Accuracy (1-10)

2. Completeness (1-10)

3. Clarity (1-10)

4. Groundedness (1-10)

Context: [Retrieved documents]

Question: [User question]

Response: [Generated answer]

2. Human Expert Validation

Method: Domain experts evaluate responses for accuracy and usefulness Implementation: Regular sampling of responses for expert review

3. User Feedback Integration

Method: Collect and analyze user ratings and feedback Metrics: Thumbs up/down, detailed feedback, task completion rates

Implementing a Comprehensive RAG Evaluation Framework

Phase 1: Baseline Establishment

Create Evaluation Datasets

- Golden Question Set: Curated questions with known correct answers

- Edge Case Collection: Challenging questions that test system limits

- Real User Queries: Sample of actual user interactions

Establish Evaluation Metrics

- Retrieval Metrics: Precision, recall, relevance scoring

- Generation Metrics: Groundedness, accuracy, completeness, clarity

- End-to-End Metrics: User satisfaction, task completion rates

Phase 2: Automated Evaluation Pipeline

Retrieval Evaluation Automation

def evaluate_retrieval(question, retrieved_docs, ground_truth_docs):

# Calculate precision

relevant_retrieved = set(retrieved_docs) & set(ground_truth_docs)

precision = len(relevant_retrieved) / len(retrieved_docs)

# Calculate recall

recall = len(relevant_retrieved) / len(ground_truth_docs)

# Calculate F1 score

f1 = 2 * (precision * recall) / (precision + recall)

return {

'precision': precision,

'recall': recall,

'f1_score': f1

}

Generation Evaluation Automation

def evaluate_generation(context, question, response, ground_truth):

# Use LLM-as-judge for groundedness

groundedness_score = llm_judge_groundedness(context, response)

# Compare against ground truth for accuracy

accuracy_score = semantic_similarity(response, ground_truth)

# Evaluate completeness

completeness_score = llm_judge_completeness(question, response)

return {

'groundedness': groundedness_score,

'accuracy': accuracy_score,

'completeness': completeness_score

}

Phase 3: Continuous Monitoring and Improvement

Real-Time Quality Monitoring

- Response Quality Tracking: Monitor generation metrics in production

- Retrieval Performance: Track retrieval success rates and relevance scores

- User Satisfaction: Collect and analyze user feedback continuously

Iterative Improvement Process

- Weekly Evaluation Reviews: Analyze performance trends and identify issues

- Monthly Deep Dives: Comprehensive analysis of failure cases

- Quarterly System Updates: Implement improvements based on evaluation insights

Common Evaluation Pitfalls and How to Avoid Them

Pitfall 1: Over-Relying on Automated Metrics

Problem: Automated scores don't always reflect real user experience Solution: Balance automated evaluation with human assessment and user feedback

Pitfall 2: Ignoring Edge Cases

Problem: Focusing only on common queries while ignoring challenging scenarios Solution: Deliberately include difficult and edge-case questions in evaluation sets

Pitfall 3: Static Evaluation Sets

Problem: Using the same evaluation questions over time Solution: Regularly update evaluation sets with new real-world queries

Pitfall 4: Evaluation-Production Mismatch

Problem: Evaluation environment differs significantly from production Solution: Ensure evaluation closely mirrors production conditions and data

Measuring Business Impact: Beyond Technical Metrics

User-Centric Metrics

- Task Completion Rate: Percentage of user queries successfully resolved

- User Satisfaction Scores: Direct feedback on response quality and usefulness

- Time to Resolution: How quickly users find the information they need

- Return Usage Rate: How often users return to use the system

Business Impact Metrics

- Support Ticket Reduction: Decrease in human support requests

- Employee Productivity: Time saved through self-service capabilities

- Knowledge Accessibility: Improvement in finding company information

- Training Efficiency: Reduced time needed for employee onboarding

The Strategic Advantage of Rigorous Evaluation

Building Trust Through Transparency

Organizations with robust RAG evaluation processes can:

- Demonstrate reliability to stakeholders and users

- Identify and fix issues before they impact users

- Continuously improve system performance over time

- Make data-driven decisions about AI system investments

Competitive Differentiation

Companies that master RAG evaluation gain:

- Higher quality AI applications that users trust and rely on

- Faster iteration cycles through systematic improvement processes

- Better resource allocation by focusing on high-impact improvements

- Reduced risk of AI system failures and user dissatisfaction

Call to Action: Implement Your RAG Evaluation Framework Today

The technology exists. The methodology is proven. The competitive advantage is real.

Your 30-Day RAG Evaluation Implementation:

Week 1: Foundation Building

- Audit your current RAG system and identify evaluation gaps

- Create initial evaluation datasets with golden questions and ground truth answers

- Establish baseline metrics for both retrieval and generation quality

- Set up basic automated evaluation pipelines

Week 2: Comprehensive Assessment

- Implement LLM-as-judge evaluation for generation quality

- Develop retrieval evaluation metrics and automated scoring

- Conduct initial comprehensive evaluation of your RAG system

- Identify top improvement opportunities based on evaluation results

Week 3: Monitoring and Feedback Systems

- Implement real-time quality monitoring in production

- Set up user feedback collection and analysis systems

- Create alerting for quality degradation or system issues

- Establish regular evaluation review processes

Week 4: Optimization and Scaling

- Implement improvements based on evaluation insights

- Expand evaluation coverage to include edge cases and new scenarios

- Train team members on evaluation methodologies and tools

- Plan for continuous improvement and system evolution

The Evaluation Reality

Your users are already judging your AI agent's performance—with every interaction, every query, every response. The question isn't whether your system will be evaluated—it's whether you'll control and learn from that evaluation process.

Start today:

- Choose one critical use case for your RAG agent

- Create a golden question set with known correct answers

- Implement basic retrieval and generation evaluation metrics

- Measure current performance and identify improvement opportunities

- Establish a regular evaluation and improvement cycle

A successful RAG agent requires both high-quality retrieval and accurate, grounded generation. By understanding and measuring these two pillars, you can build AI systems that users trust, rely on, and value—turning AI from a experimental technology into a reliable business asset.

Ready to build bulletproof evaluation systems for your AI agents? Our AI Agents Workshop shows you exactly how to implement comprehensive RAG evaluation frameworks that ensure reliable, trustworthy AI performance. Learn to measure what matters and build systems that continuously improve over time.

Related Articles

Accelerating Feature Development with AI Agents: The Breakdown Approach That Transforms Software Delivery

Discover how AI agents can revolutionize software development by breaking down complex features into manageable, testable components, creating a more efficient and reliable development flow that delivers results faster.

AI for Instant Market Intelligence: The Competitive Analysis Revolution

Discover how AI agents can deliver comprehensive competitive analysis in minutes instead of weeks, transforming market intelligence from a quarterly event into a real-time strategic capability.

Automating Training and Onboarding: How AI Can Instantly Generate Step-by-Step Recaps

Discover how AI agents can solve the common problem of lost knowledge and inconsistent training materials by automatically generating comprehensive step-by-step guides from live sessions.